Written by VSTE Professional Services member Kristina Peck

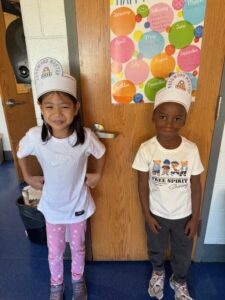

When most people think of “making” in a school, their minds often jump to 3D printers, robotics kits, or bins of craft supplies. But the maker movement is about more than just tools—it’s about a mindset. The maker mindset is a belief that every student is capable of creating, problem-solving, and innovating—all skills that are transferable to and needed in the workforce.

A successful maker-centered classroom isn’t built on fancy equipment—it’s built on trust, curiosity, and community. Students must feel safe taking risks and failing. The real work of bringing making into your classroom starts by building a culture of making, not just bringing in the physical tools.

Why Culture Comes First

When students work within a culture of making, they are free to explore, tinker, and try again. They can see failure as an opportunity to learn, rather than a conclusion. Students build the confidence to share their ideas and the resilience to keep trying, even when things get hard or break. So before you get out the supplies, start with the foundation: a culture of making.

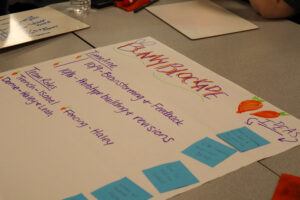

5 Ways to Build a Culture of Making

- Normalize Risk-Taking and Productive Failure - This can be as simple as changing the language of your classroom. Mistakes are now prototypes, and failure is now a learning opportunity. Celebrate risk-taking with a “favorite fail” board, where students highlight attempts that didn’t work yet but led to learning.

- Focus on Growth Over Perfection - Focus more on the process than the final project. Give students feedback as they work and provide opportunities for them to reflect. Try to spotlight effort, iteration, and reflection by letting students revise and resubmit. Trying to learn from the process should be expected, not extra.

- Build Psychological Safety - Create norms for respectful critiques that are about the work - not the person. Teaching students how to be respectful when critiquing is important. One way to do this is to provide feedback routines like “I like…” or “I wonder…” to make sure peer review is supportive, not discouraging.

- Foster Collaboration and Share Ownership - Students should feel like everyone has something valuable to contribute. Each student comes in with their own strengths and abilities that can help the class work towards success. Design group challenges that allow students to demonstrate or use their strengths.

- Connect Making to Meaning - Make connections to the world around the students. Can you tie the projects to real-world challenges or personal interests? Can you bring in authentic audiences to see their work and provide feedback? Can you help students to see themselves as more than just kids, but as creators who can make an impact in their community?

A culture of making in a classroom doesn’t appear overnight. It’s built moment by moment—through the way we respond to mistakes, the language we use about learning, and the trust we cultivate in our classrooms.

When students feel safe, valued, and empowered, they’ll take the creative risks that real learning requires. And that’s when the maker mindset truly blooms.

Start with culture. The making will follow.